Artificial Intelligence and Inclusion

Dr Georgina Curto recently defended her doctoral thesis, entitled Artificial Intelligence and Inclusion: An Analysis of Bias Against the Poor. She carried out her research in the Department of Ethics at the IQS School of Management under the supervision of Dr Flavio Comim, head of the school’s Social, Economic & Ethics (SEE) research group.

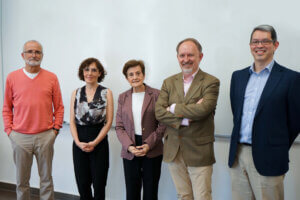

The jury appointed for the defence of Dr Curto’s doctoral thesis comprised Dr Adela Cortina, professor emerita at the University of Valencia; Dr Ramón López de Mántaras, research professor at the Artificial Intelligence Research Institute (IIIA) of CSIC; and Dr Txetxu Ausín Díez, head scientist at the Philosophy Institute of CSIC.

The philosopher Dr Adela Cortina coined the term “aporophobia” to name the rejection of the poor: the tendency to welcome the rich while the poor are ignored, rejected, and sometimes subjected to verbal and physical aggression. She developed the concept in her 2017 book Aporofobia, el rechazo al pobre. Ending poverty is the first Sustainable Development Goal (SDG) of the United Nations 2030 Agenda. In addition, the European Commission’s Ethics Guidelines for Trustworthy Artificial Intelligence (AI) form part of a broader framework for safeguarding basic human rights in the digital environment.

In her doctoral thesis, Dr Curto focused on how this type of discrimination appears in AI systems. Many of these systems are trained on historical data generated by the behaviour of online users, so they frequently replicate and even amplify biases that already exist in society. This is particularly worrying when AI is used in areas such as justice, health, or education, given that the outputs of AI systems are often overvalued.

A study framework on aporophobia and the use of AI

There is scant literature on how to identify and mitigate bias against the poor (aporophobia) in AI. In this context, Dr Curto’s thesis presents a conceptual framework that seeks to explain bias in AI from a multidisciplinary perspective, including the psychological mechanisms that make bias part of human cognition. The thesis is also pioneering in providing empirical evidence of aporophobia in AI systems and social networks. It measures bias against the poor using “word embeddings”, vector representations of the semantic meaning of words, taken from pre-trained models: word2vec trained on Google News, and GloVe trained on Twitter and on Wikipedia.
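To illustrate how bias can be read off word embeddings, the sketch below implements one widely used measure, the Word Embedding Association Test (WEAT) effect size of Caliskan et al. (2017). This is an assumption for illustration only: the thesis may use a different or adapted metric. The word lists and the tiny three-dimensional vectors are hypothetical toy data, not values from any pre-trained model. The idea is to compare how strongly words for rich and poor people associate with positive versus negative attribute words, using cosine similarity.

```python
import math
import statistics
from typing import List, Mapping, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w: Vector, attrs_a: List[Vector], attrs_b: List[Vector]) -> float:
    """s(w, A, B): mean similarity of w to set A minus mean similarity to set B."""
    return (statistics.mean(cosine(w, a) for a in attrs_a)
            - statistics.mean(cosine(w, b) for b in attrs_b))


def weat_effect_size(emb: Mapping[str, Vector],
                     targets_x: List[str], targets_y: List[str],
                     attrs_a: List[str], attrs_b: List[str]) -> float:
    """WEAT effect size: difference in mean association of target sets X and Y,
    normalised by the (sample) standard deviation over all target words."""
    a_vecs = [emb[w] for w in attrs_a]
    b_vecs = [emb[w] for w in attrs_b]
    s_x = [association(emb[w], a_vecs, b_vecs) for w in targets_x]
    s_y = [association(emb[w], a_vecs, b_vecs) for w in targets_y]
    return (statistics.mean(s_x) - statistics.mean(s_y)) / statistics.stdev(s_x + s_y)


# Hypothetical toy embeddings (NOT from word2vec or GloVe), chosen so that
# "rich" words sit near positive attributes and "poor" words near negative ones.
TOY_EMBEDDINGS = {
    "rich":        [0.90, 0.10, 0.00],
    "wealthy":     [0.80, 0.20, 0.10],
    "poor":        [0.10, 0.90, 0.00],
    "homeless":    [0.20, 0.80, 0.10],
    "honest":      [0.85, 0.15, 0.05],
    "trustworthy": [0.90, 0.05, 0.10],
    "criminal":    [0.15, 0.85, 0.05],
    "lazy":        [0.10, 0.90, 0.10],
}

if __name__ == "__main__":
    d = weat_effect_size(
        TOY_EMBEDDINGS,
        targets_x=["rich", "wealthy"], targets_y=["poor", "homeless"],
        attrs_a=["honest", "trustworthy"], attrs_b=["criminal", "lazy"],
    )
    # A positive value means the "rich" words lean towards the positive
    # attributes relative to the "poor" words.
    print(f"WEAT effect size: {d:.3f}")
```

With real data, `emb` could be a gensim `KeyedVectors` object (for example, loaded from the Google News word2vec file with `KeyedVectors.load_word2vec_format(path, binary=True)`), since it supports `emb[word]` lookups. A full analysis would also use larger, carefully validated word lists and a permutation test for significance, which this sketch omits.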

Given how difficult it is to interpret “justice in AI models”, the thesis proposes an AI bias mitigation process that involves stakeholders, including developers and users, so that they share responsibility, reach agreements, and communicate openly about solutions that are often imperfect in terms of equity.

Finally, Dr Curto proposed an AI simulation model to explore whether reducing aporophobia would lead to lower poverty levels, the target of the first SDG. Looking ahead, the aim of this research is to offer a complementary way to fight poverty, one that focuses not only on traditional redistribution policies but also on mitigating discrimination.

AI can aggravate discrimination against the most vulnerable people, but it can also be a highly efficient and useful tool for combating discrimination. Dr Curto’s thesis provides a conceptual framework and applied tools for developing fairer AI systems, and proposes using AI to make the world a better place.

Her thesis was conducted in collaboration with the Artificial Intelligence Research Institute (IIIA) at CSIC and with the eVida research group at the University of Deusto.

The thesis received funding from the Aristos Campus Mundus programme, supported by Ramon Llull University, the University of Deusto, and Comillas Pontifical University.